POSTS

SILICON

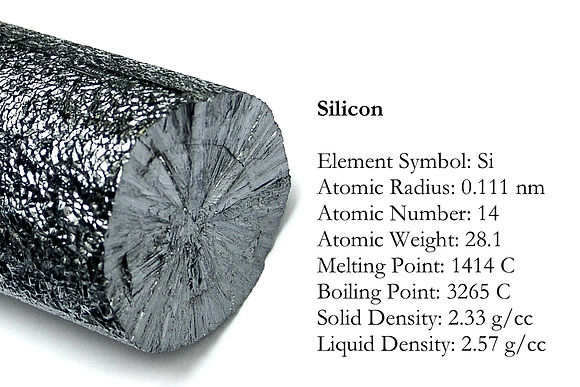

In 1824 Berzelius discovered silicon, and in 1854 Deville prepared crystalline silicon. Silicon makes up about 27% of the earth's crust by weight and is the second most abundant element there, exceeded only by oxygen. Silicon is not found free in nature; it occurs chiefly as the oxide and as silicates. Hyperpure silicon can be doped with boron, gallium, phosphorus, or arsenic to produce the silicon used for making chips. Silicon is classified as a metalloid (semimetal) and appears as a hard gray solid with a silvery metallic luster. Regular-grade silicon (99% pure) costs about $0.50/g, while hyperpure silicon (99.99999% pure or better, called "seven nines", i.e. one impurity atom in 10 million) may cost as much as $4/g.
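The "nines" shorthand is just a power of ten. Here is a minimal Python sketch that turns a count of nines into a purity percentage and an impurity ratio. The function names are hypothetical, chosen only for illustration:

```python
def impurity_fraction(nines: int) -> float:
    """Fraction of atoms that are impurities, e.g. 7 nines -> 1e-7."""
    return 10.0 ** -nines


def atoms_per_impurity(nines: int) -> int:
    """Number of atoms for each impurity atom, e.g. 7 nines -> 10,000,000."""
    return 10 ** nines


if __name__ == "__main__":
    # 2 nines is regular grade (99%), 7 nines is hyperpure (99.99999%)
    for n in (2, 7):
        purity = 100 * (1 - impurity_fraction(n))
        digits = max(n - 2, 0)  # decimal places needed to show all the nines
        print(f"{n} nines: {purity:.{digits}f}% pure, "
              f"1 impurity atom per {atoms_per_impurity(n):,} atoms")
```

Each extra nine makes the material ten times cleaner, which helps explain why the price climbs so steeply with purity.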

SKILLS

At school, you learn the hard skills computer engineers need to do their jobs, such as computer design, construction, and programming. You also learn some soft skills, like communication and teamwork, but there is more to it.

Soft skills include: Communication, Leadership, Adaptability, Listening, Problem solving, Teamwork, Work ethic, Decision making, Strategic thinking, Collaboration, Time management, Self-motivation, Multitasking, Conflict resolution, Responsibility, Flexibility, Organization, Working under pressure, Competitiveness, Entrepreneurship, Integrity, Hands-on ability, Innovation, Consistency, Attention to detail, Creativity, Energy, Enthusiasm

BRAIN & CHIP

How fast is a computer compared to a human? Imagine multiplying the numbers above: a human would take about 1000 seconds, with a good chance of error, while a commercial processor can perform 1000 billion (10^12) such operations per second. That beats the brain by a factor of 10^15, or 1,000,000,000,000,000 times! As for storage, a small cube of electronics the size of a brain can store tens of terabytes of data, while the brain is thought to be capable of storing 1 to 1000 TB (some neuroscientists claim it could hold the entire content of the Internet). Which is more powerful?
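The 10^15 figure is a ratio of throughputs. The processor does 10^12 multiplications per second, while the human needs 1000 seconds for a single one. Here is a small Python check of that arithmetic, using only the figures quoted above:

```python
HUMAN_SECONDS_PER_MULTIPLICATION = 1000        # figure quoted in the text
CPU_MULTIPLICATIONS_PER_SECOND = 1000 * 10**9  # "1000 billion" per second


def speedup(human_seconds_per_op: int, cpu_ops_per_second: int) -> int:
    # In the time the human spends on ONE operation, the CPU completes
    # cpu_ops_per_second * human_seconds_per_op operations.
    return cpu_ops_per_second * human_seconds_per_op


ratio = speedup(HUMAN_SECONDS_PER_MULTIPLICATION, CPU_MULTIPLICATIONS_PER_SECOND)
print(f"{ratio:,}")  # 1,000,000,000,000,000
```

Integer arithmetic is used so the result is exactly 10^15, with no floating-point rounding.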

FIRST HARD DISK DRIVE

The IBM 350 disk storage unit, the first disk drive, was announced by IBM in 1956 as a component of the IBM 305 RAMAC computer system. RAMAC stood for "Random Access Method of Accounting and Control". It stored 5 million 6-bit characters (3.75 MB) on 50 disks, each 61 cm in diameter with 100 tracks. The disks spun at 1200 rpm, and the data transfer rate was 6600 B/s. The cabinet was 172 cm high and 74 cm wide, and it weighed about 1000 kg. Compare that to a 15 × 11 × 1 mm microSD card weighing 0.25 g that stores 1 TB and reads at 160 MB/s, not to mention the differences in power consumption, reliability, and so on.

The RAMAC system with this drive was leased for $3,200 per month (in 1956 dollars), while a microSD card sells for $5 to $100 depending on capacity.
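The comparison comes down to a few unit conversions. Here is a rough Python sketch using the figures quoted above, assuming decimal units (1 MB = 10^6 bytes, 1 TB = 10^12 bytes):

```python
# IBM 350 figures from the text
IBM350_CHARACTERS = 5_000_000
BITS_PER_CHARACTER = 6
IBM350_RATE = 6_600                 # bytes per second

# microSD figures from the text, decimal units assumed
MICROSD_BYTES = 10**12              # 1 TB
MICROSD_RATE = 160 * 10**6          # 160 MB/s

ibm350_bytes = IBM350_CHARACTERS * BITS_PER_CHARACTER // 8   # 3,750,000 B = 3.75 MB
capacity_ratio = MICROSD_BYTES / ibm350_bytes                # about 266,667x
speed_ratio = MICROSD_RATE / IBM350_RATE                     # about 24,242x
ibm350_full_read_minutes = ibm350_bytes / IBM350_RATE / 60   # about 9.5 minutes

print(f"IBM 350 capacity: {ibm350_bytes:,} bytes")
print(f"microSD holds {capacity_ratio:,.0f}x more and reads {speed_ratio:,.0f}x faster")
print(f"Reading the whole IBM 350 takes about {ibm350_full_read_minutes:.1f} minutes")
```

The full-read time assumes the drive streams continuously at its rated transfer rate and ignores access (seek) time, so the real figure would be longer.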

SpiNNaker

A massively parallel, low-power, neuromorphic supercomputer currently being built at the University of Manchester in the UK. It is designed to model very large, biologically realistic spiking neural networks in real time. The machine will consist of 65,536 identical 18-core processors (48 processors on each PCB, shown above), giving it 1,179,648 cores in total. Each processor has an on-board router to form links with its neighbours, as well as its own 128 MB of memory to hold synaptic weights. Each core is an ARM968 manufactured using a 130 nm process. The machine is a small-scale artificial copy of your brain, designed to simulate your neurons. Each core simulates about 1,000 neurons. With 18 cores per processor and 65,536 processors, that is a total of about 1.2 billion neurons. Quite a large figure!

But this is only about 1% of the human brain, which is estimated to have about 86 billion neurons (connected by some 100 trillion synapses).
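The neuron count is a straight product of the machine's figures. Here is a small Python sketch that assumes about 1,000 neurons per core. For the comparison, it also assumes the commonly cited estimate of roughly 86 billion neurons in a human brain:

```python
PROCESSORS = 65_536
CORES_PER_PROCESSOR = 18
NEURONS_PER_CORE = 1_000            # approximate, from the text
HUMAN_BRAIN_NEURONS = 86 * 10**9    # assumption: commonly cited estimate

total_cores = PROCESSORS * CORES_PER_PROCESSOR    # 1,179,648
total_neurons = total_cores * NEURONS_PER_CORE    # 1,179,648,000
fraction_of_brain = total_neurons / HUMAN_BRAIN_NEURONS

print(f"{total_cores:,} cores simulate about {total_neurons:,} neurons")
print(f"that is {fraction_of_brain:.1%} of a human brain")  # 1.4%
```

The result, around 1.4%, rounds to the "about 1%" usually quoted for this machine.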

MOORE'S LAW END

Rock's law says that the cost of a semiconductor chip fabrication plant doubles every four years. As of 2015, the price had already reached about 14 billion US dollars. If Moore's Law were to continue through 2050, engineers would have to build transistors from components smaller than a single hydrogen atom. Keeping up is also increasingly expensive for companies, since building fabrication plants for new chips costs billions. Because of these two factors, cost and transistor dimensions, Moore's Law is expected to peter out sometime in the early 2020s, when chips feature components that are only around 5 nanometers apart.
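Rock's law is an exponential with a four-year doubling period: cost(year) = cost_2015 × 2^((year - 2015) / 4). Here is a minimal Python sketch that projects the 2015 figure forward. It assumes, purely for illustration, that the doubling held exactly. The function name and its defaults are hypothetical:

```python
def fab_cost_billions(year: int, base_year: int = 2015,
                      base_cost: float = 14.0, doubling_years: float = 4.0) -> float:
    """Fab cost in billions of USD if Rock's law held exactly from base_year."""
    return base_cost * 2 ** ((year - base_year) / doubling_years)


for year in (2015, 2019, 2023, 2027):
    print(year, f"about ${fab_cost_billions(year):.0f} billion")
```

Under this idealized trend the cost goes from $14 billion to $28, $56, and $112 billion at four-year steps. It is a trend line, not a forecast.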

GOOGLE'S QUANTUM COMPUTER

Google's quantum computer is claimed to be about 1.5 billion times faster (well, in fact it is more powerful, not faster) than the best computer today (Summit, 200 PFLOPS). This holds only for one specific task, sampling the output of random quantum circuits. For general arithmetic, we cannot yet expect much more than multiplying two small integers. The quantum computer took 200 seconds for what Google estimated Summit would need 10,000 years to complete. It is worth knowing that quantum computers are very powerful for certain classes of algorithms because of the massive parallelism they offer. They are not going to replace classical computers, but they may be used as back-end computers.
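The "1.5 billion times" figure follows from comparing the two run times quoted above. Here is a quick Python check, assuming a 365.25-day year:

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600     # Julian year, an assumption

classical_seconds = 10_000 * SECONDS_PER_YEAR   # Summit estimate from the text
quantum_seconds = 200                           # quantum run time from the text

ratio = classical_seconds / quantum_seconds
print(f"about {ratio / 1e9:.2f} billion times")  # about 1.58 billion
```

The result is about 1.58 billion, so the "1.5 billion" in the text is a rounded-down figure.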

THE DIE STRUCTURE

Zoom into a modern chip with billions of transistors and miles of fine wires arranged in a multilayer structure.

INTEL'S NNP

Intel built a first-generation version of this chip for a limited set of partners, such as Facebook, positioning itself as an important player in AI. The chip, called the neural network processor (NNP), is optimized for running neural networks.

HARWELL DEKATRON CALCULATOR

The 2.5-tonne, number-crunching, wall-sized calculator began life at the Atomic Energy Research Establishment in Harwell, Oxfordshire, in 1951, and put reliability over processing speed. It has 828 tubes, 480 relays, and a user interface of 199 lamps. It is the oldest working digital computer today, kept at the National Museum of Computing, where the beast now lives and computes. It took 10 seconds to divide one number by another.